Activation Functions: A Look at Some Newer Activation Functions

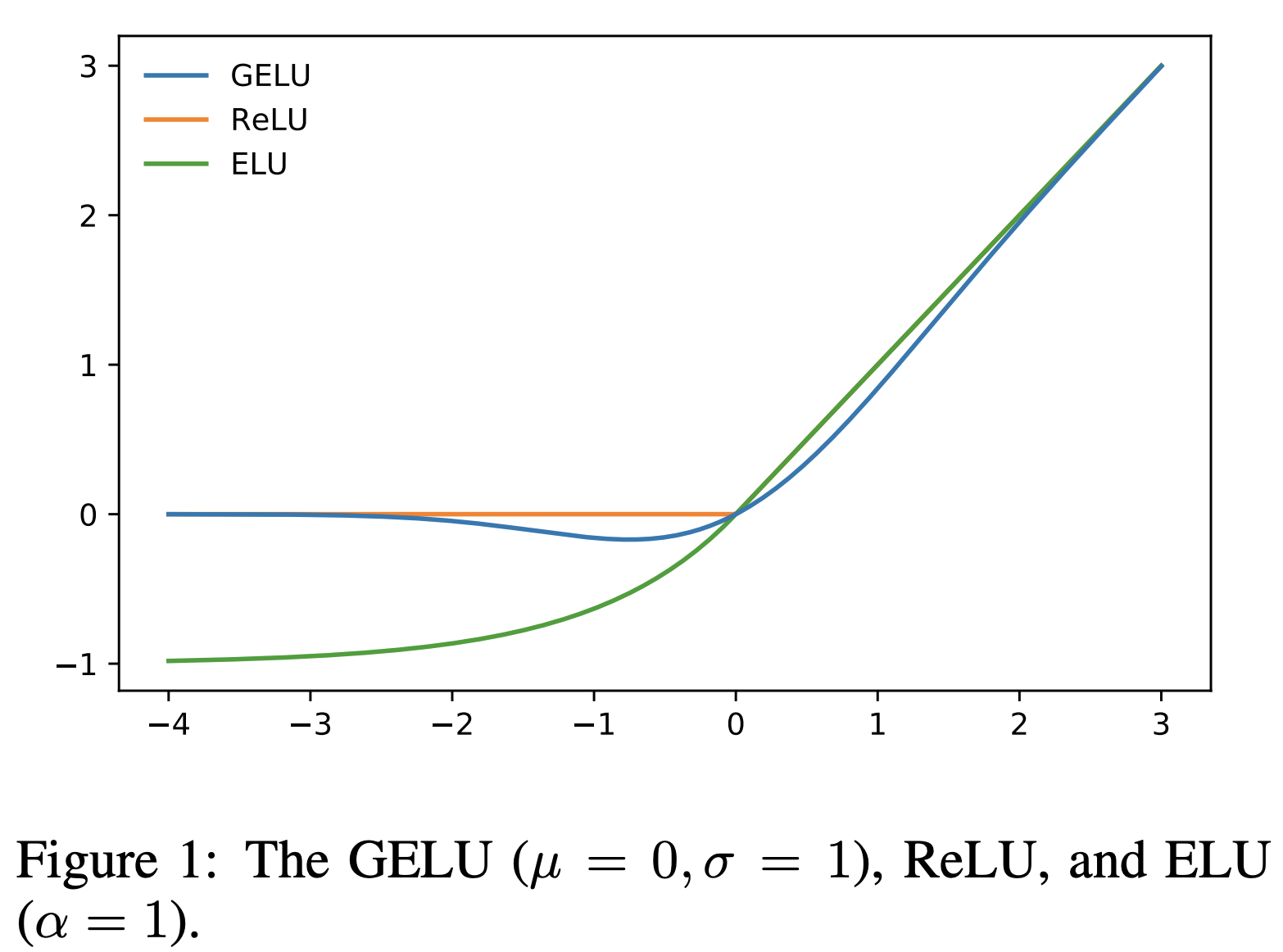

GELU (Gaussian Error Linear Unit)

The GELU nonlinearity is the expected transformation of a stochastic regularizer which randomly applies the identity or zero map to a neuron’s input. It weights inputs by their value, rather than gating them by their sign as ReLU does.

1. Equation:

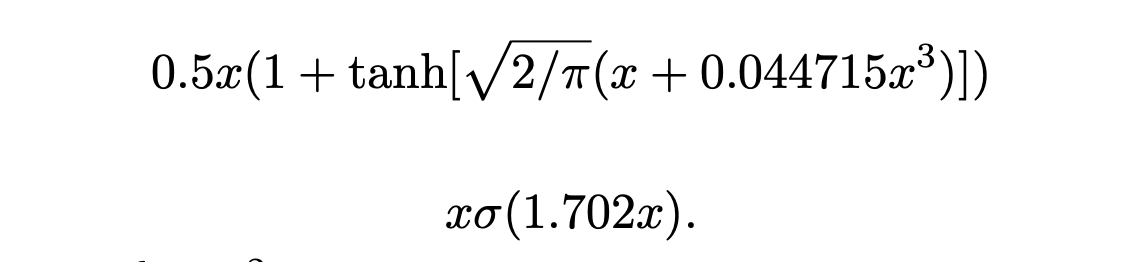

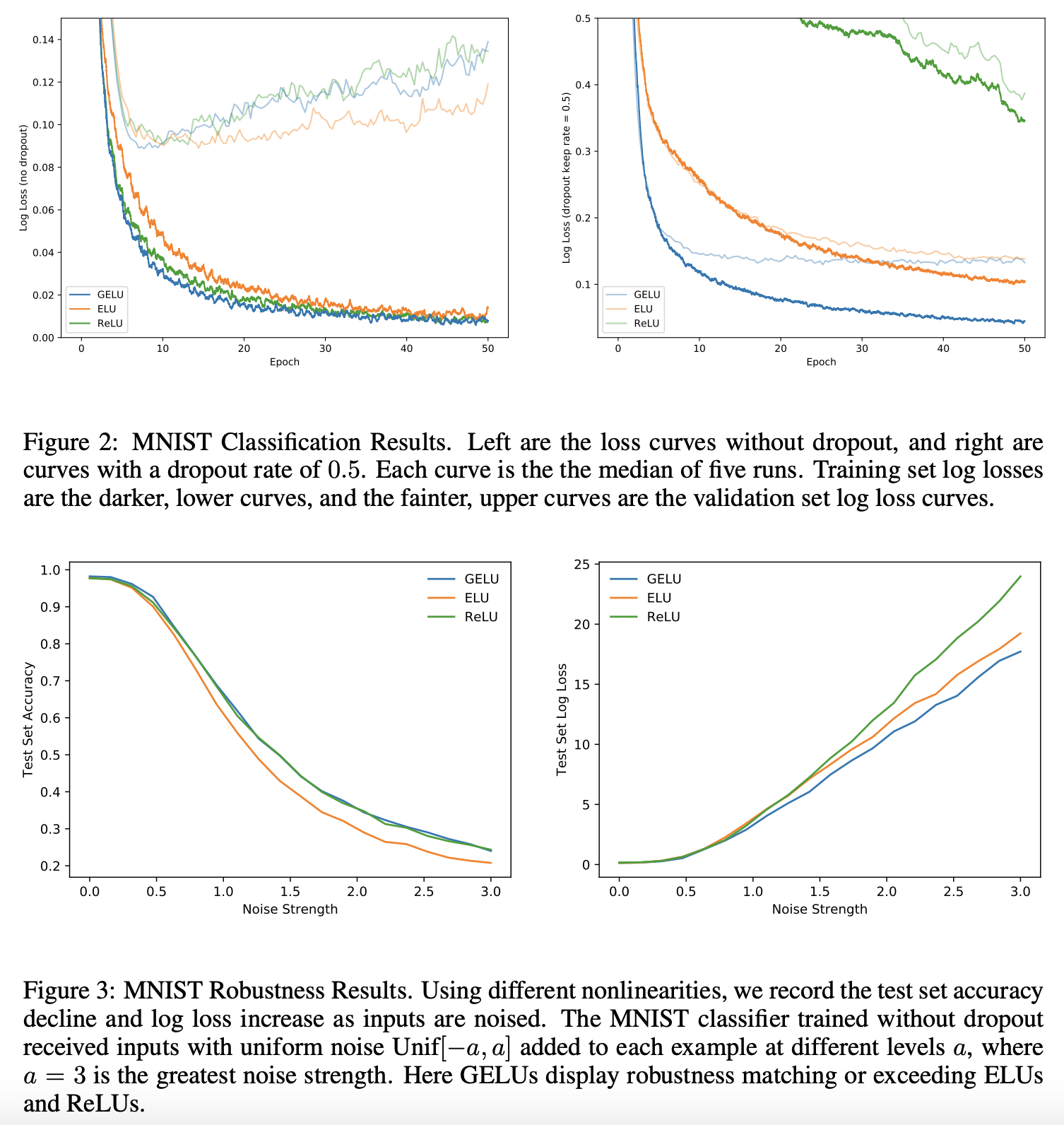
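Since the equation above is only available as an image, here is a minimal Python sketch of GELU, assuming the standard definition from the referenced paper: GELU(x) = x · Φ(x), where Φ is the standard normal CDF. The function names (`phi`, `gelu`, `gelu_tanh`, `gelu_monte_carlo`) are illustrative and are not taken from the authors' repository. The last function is a small simulation of the "stochastic regularizer" view: keep the input with probability Φ(x), zero it otherwise, and average.

```python
import math
import random


def phi(x: float) -> float:
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu(x: float) -> float:
    """Exact GELU: the input scaled by the probability Phi(x)."""
    return x * phi(x)


def gelu_tanh(x: float) -> float:
    """Tanh-based approximation of GELU given in the paper."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


def gelu_monte_carlo(x: float, n_samples: int = 100_000, seed: int = 0) -> float:
    """Estimate GELU by simulation (illustrative helper, an assumption of this sketch).

    Each sample applies the identity map with probability Phi(x) and the
    zero map otherwise; the mean of the results approaches x * Phi(x).
    """
    rng = random.Random(seed)
    p_keep = phi(x)
    kept = sum(1 for _ in range(n_samples) if rng.random() < p_keep)
    return x * kept / n_samples


if __name__ == "__main__":
    for value in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(value, gelu(value), gelu_tanh(value), gelu_monte_carlo(value))
```

The exact form and the tanh approximation should agree closely over typical activation ranges, and the simulated average should approach the exact value as the number of samples grows.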

2. GELU Experiments

Classification Experiment: MNIST classification

Autoencoder Experiment: MNIST Autoencoder

Reference:

https://arxiv.org/pdf/1606.08415.pdf

https://github.com/hendrycks/GELUs

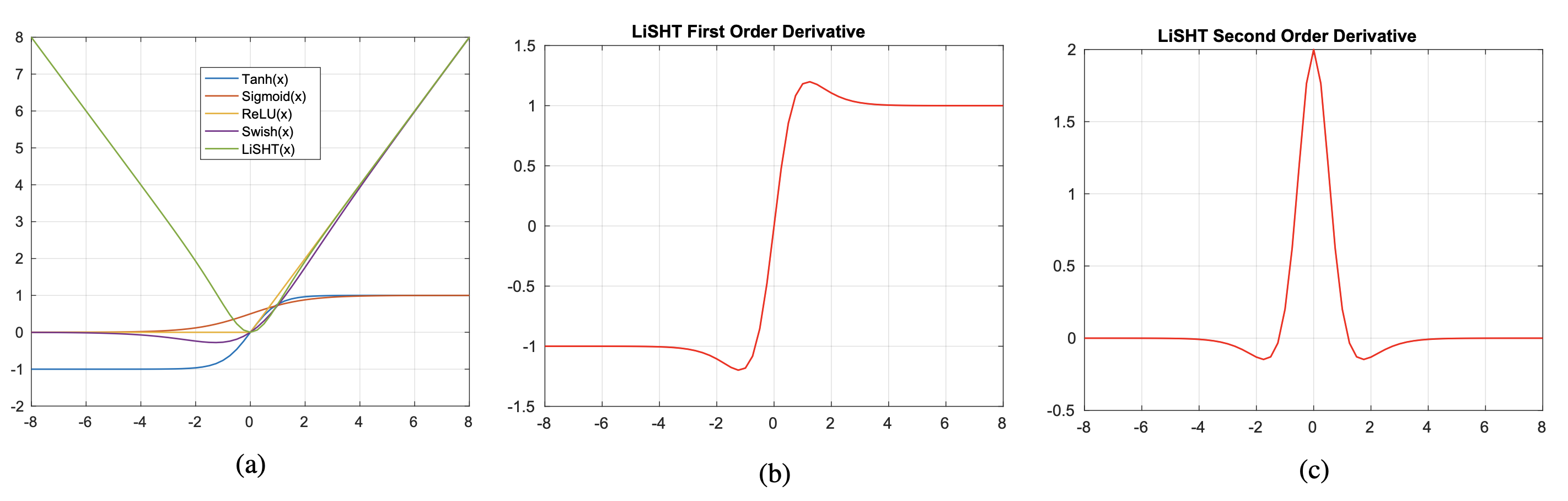

LiSHT (Linearly Scaled Hyperbolic Tangent Activation)

1. Equation:

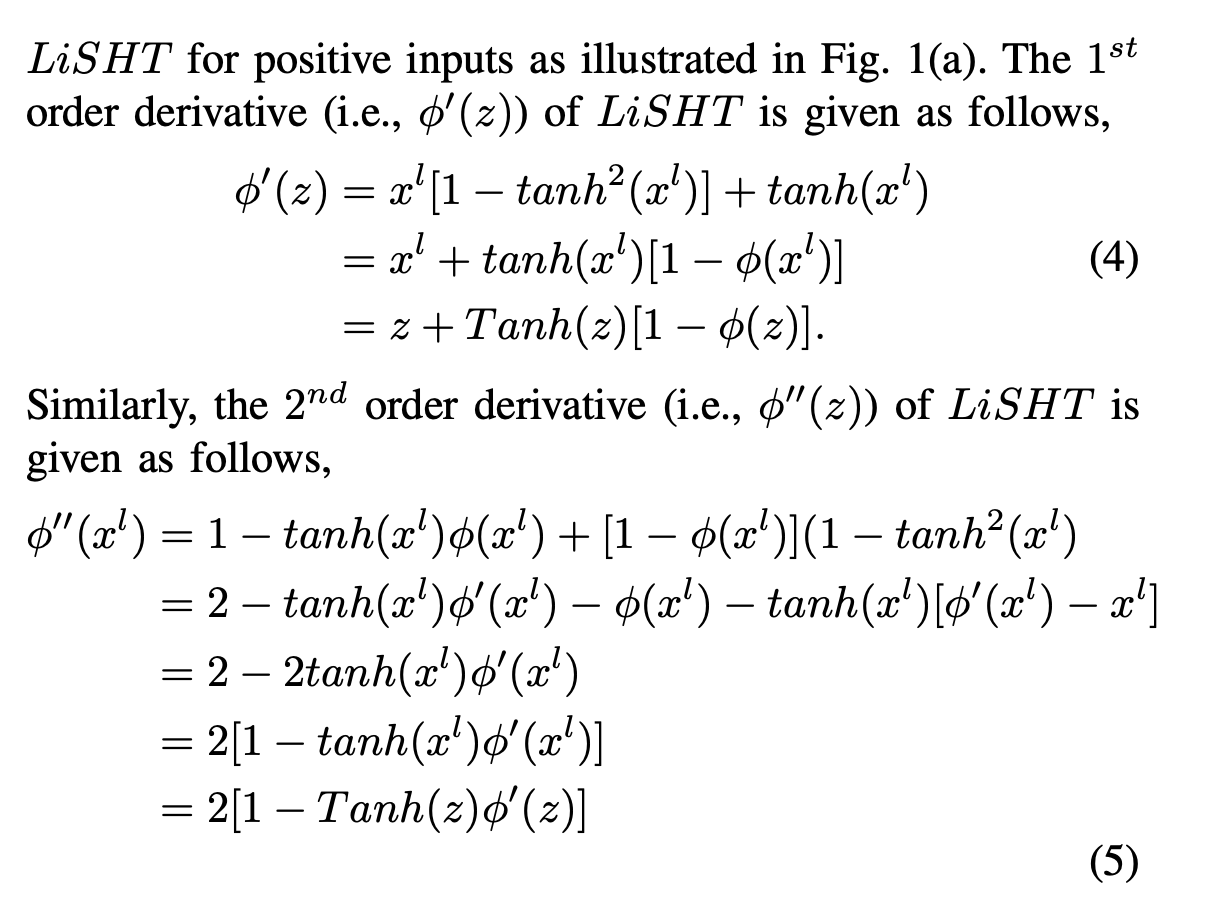

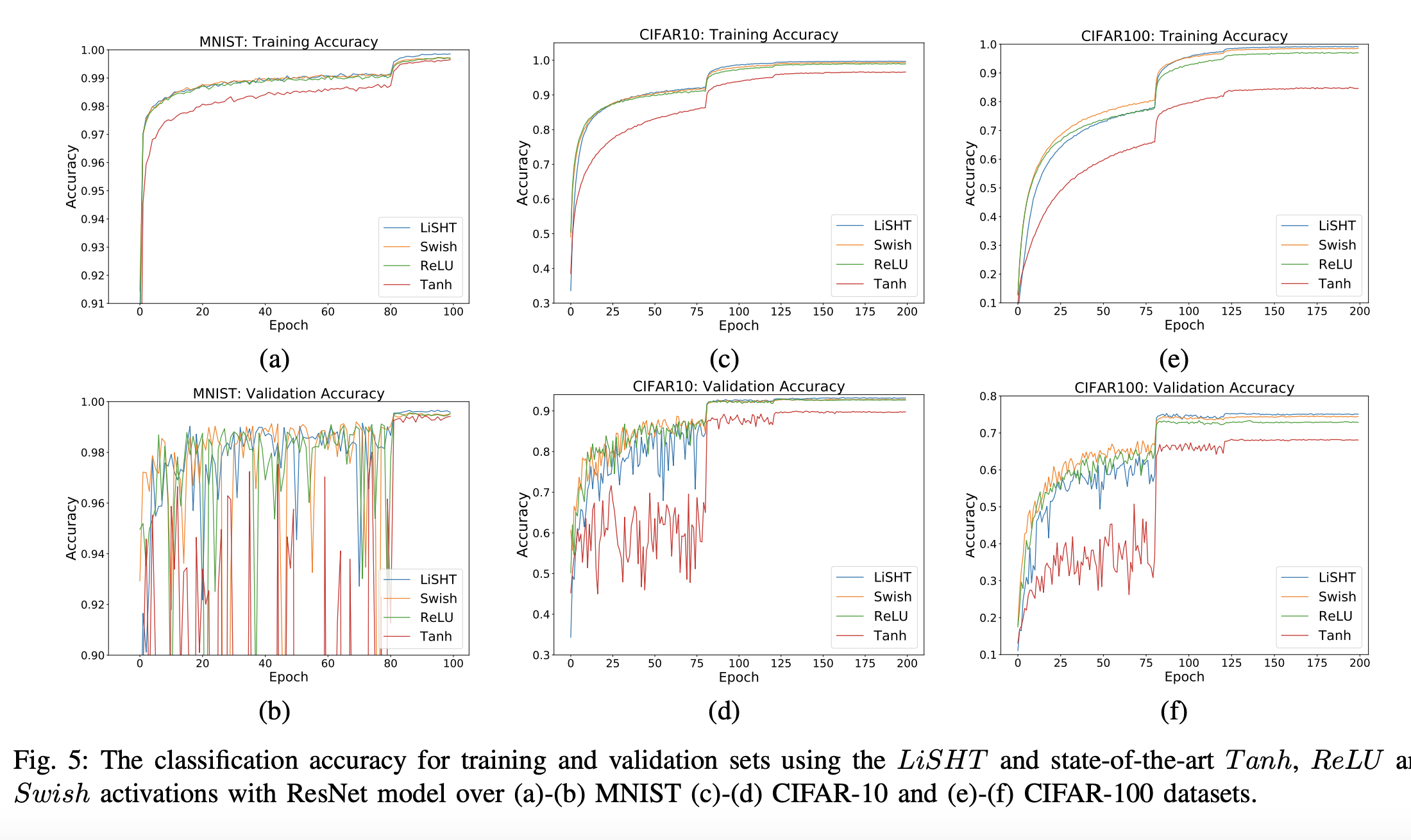
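As a rough sketch of the equation shown in the image above, and assuming the definition from the referenced paper, LiSHT(x) = x · tanh(x), the function can be written in a few lines of Python. The name `lisht` is illustrative.

```python
import math


def lisht(x: float) -> float:
    """LiSHT: the hyperbolic tangent scaled linearly by its own input."""
    return x * math.tanh(x)


if __name__ == "__main__":
    for value in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(value, lisht(value))
```

Because both x and tanh(x) change sign together, the output is non-negative and symmetric about zero, so negative inputs are not suppressed toward zero as they are with ReLU.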

2. LiSHT Experiments

Classification Experiment: MNIST & CIFAR10

Sentiment Classification Results using LSTM

Reference

https://arxiv.org/pdf/1901.05894.pdf

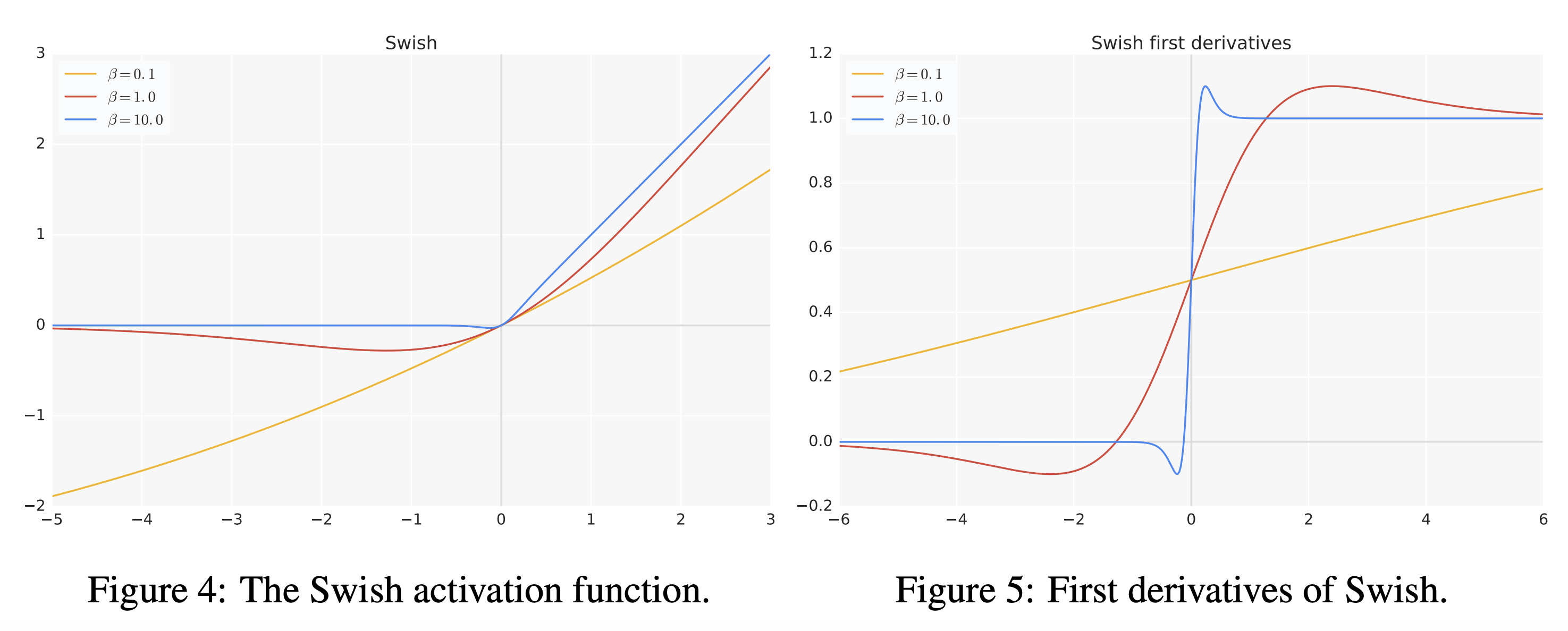

Swish

1. Equation:

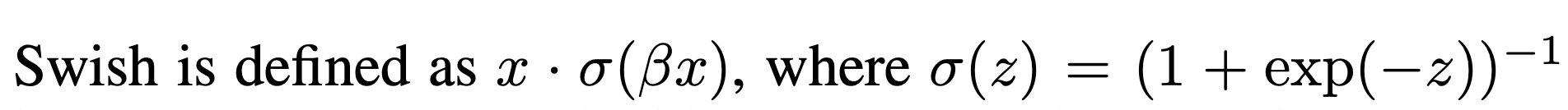
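The equation in the image above can be sketched in Python as follows, assuming the definition from the referenced paper: Swish(x) = x · sigmoid(βx), where β is either a constant or a trainable parameter. The names `sigmoid` and `swish`, and the default `beta=1.0`, are choices made for this sketch.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, split by sign so math.exp never overflows."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish: the input gated by a sigmoid of itself, scaled by beta."""
    return x * sigmoid(beta * x)


if __name__ == "__main__":
    for value in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(value, swish(value), swish(value, beta=10.0))
```

With β = 0 the gate is constant at 0.5 and the function reduces to x / 2; as β grows, the gate sharpens and the function approaches ReLU.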

2. Swish Experiments

Machine Translation

Reference

https://arxiv.org/pdf/1710.05941.pdf

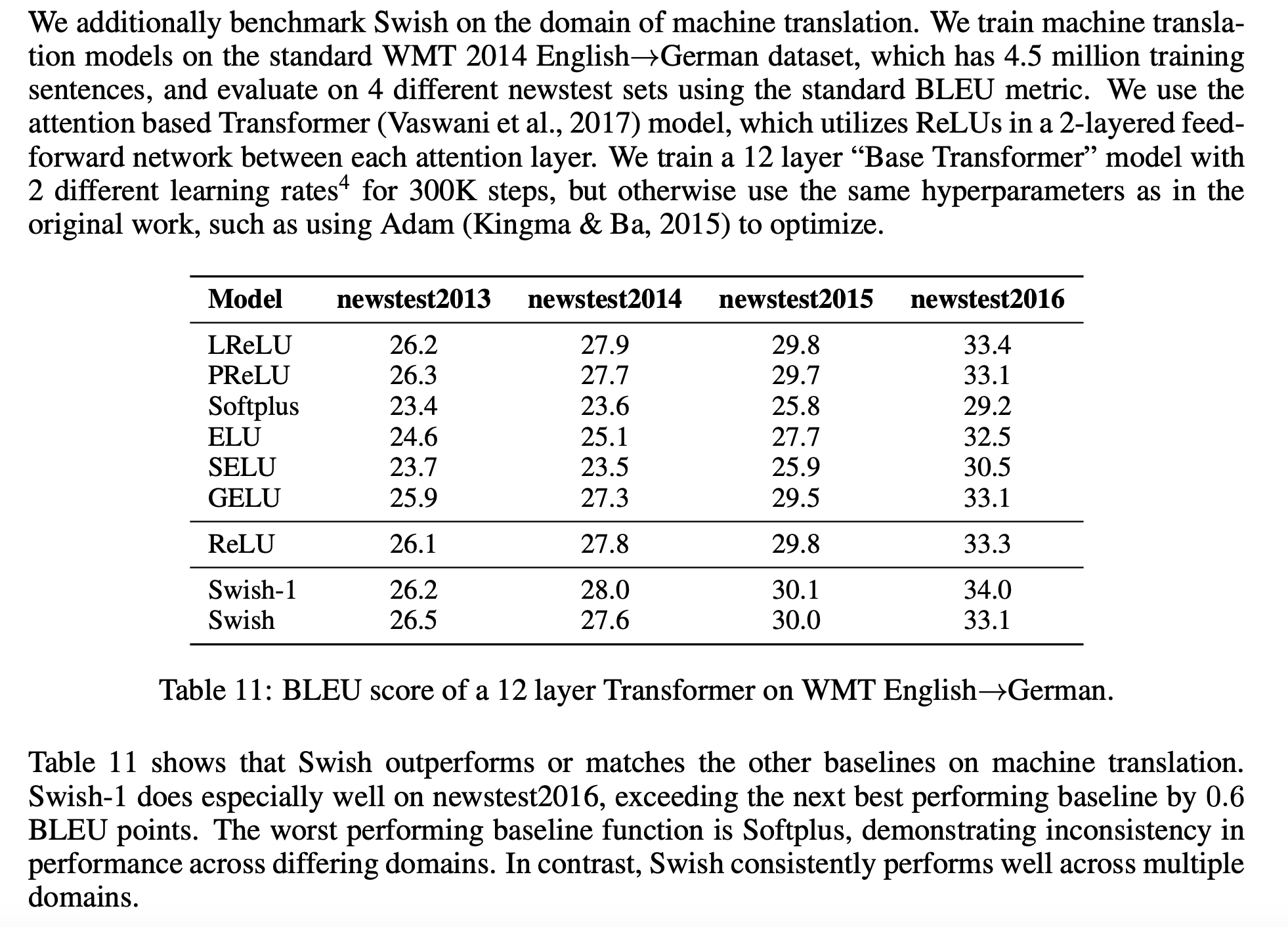

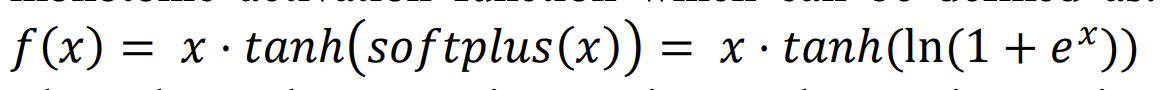

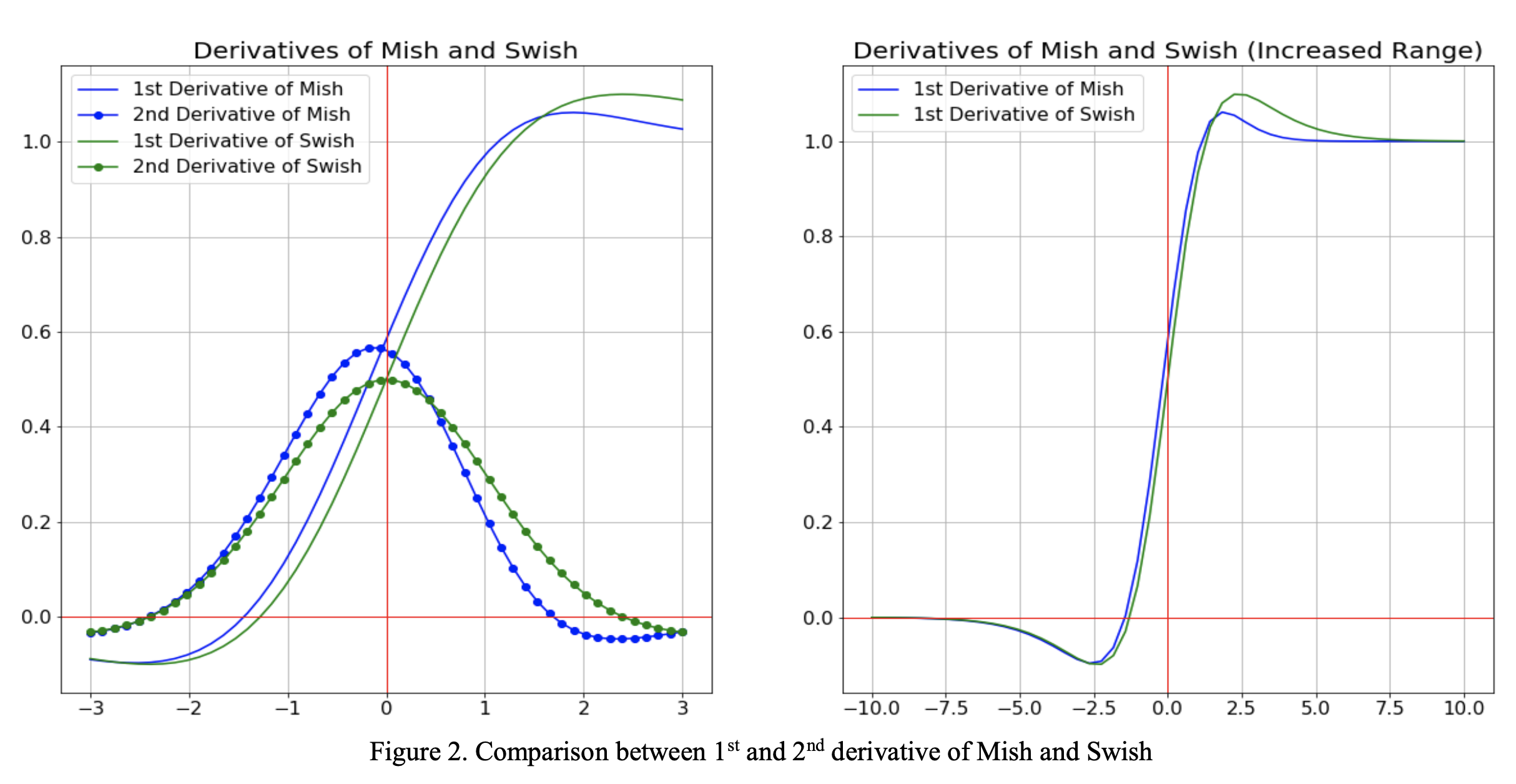

Mish

1. Equation:

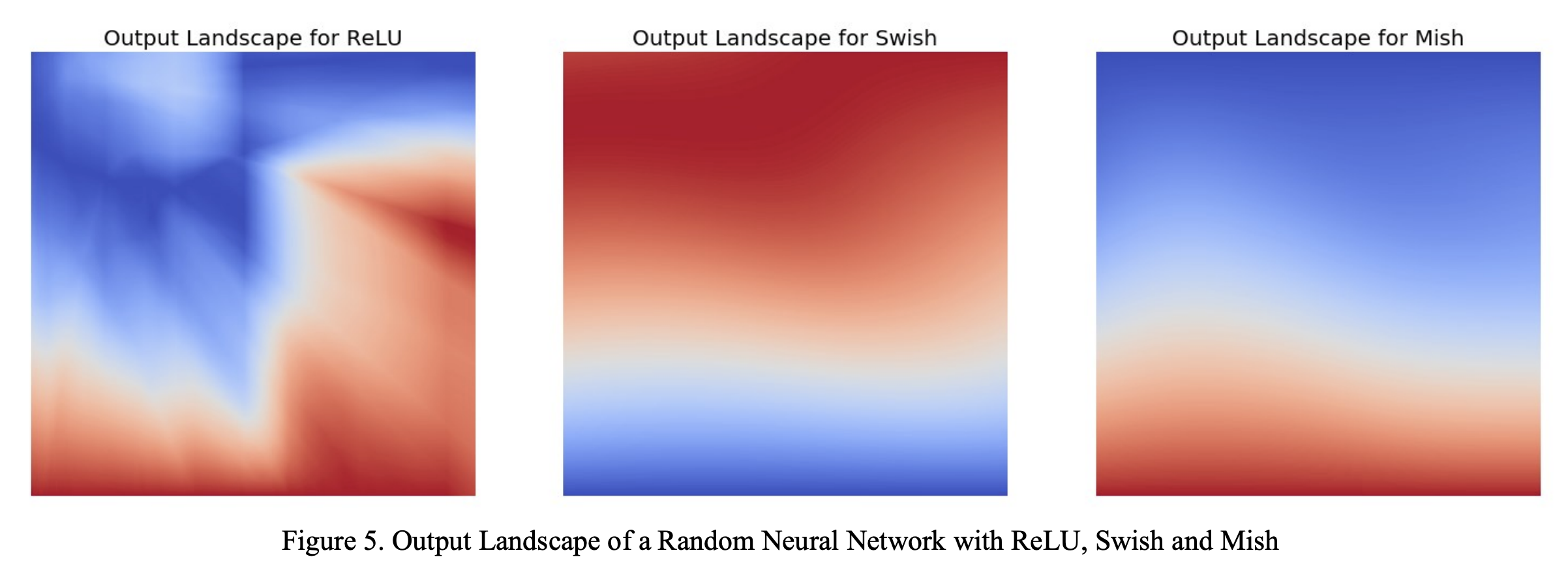

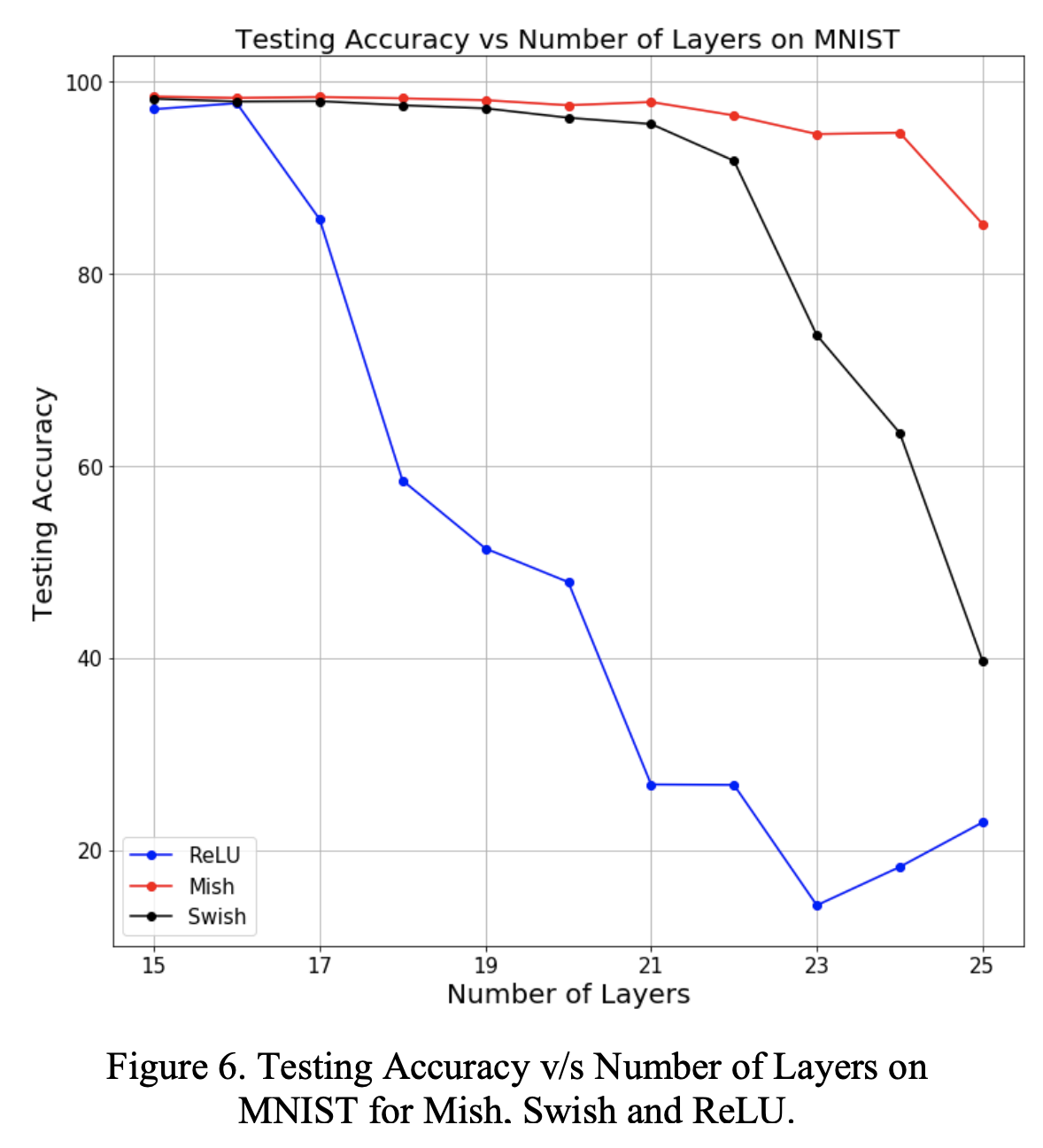
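For readers who cannot see the equation image above, here is a minimal Python sketch assuming the definition from the referenced paper: Mish(x) = x · tanh(softplus(x)), with softplus(x) = ln(1 + eˣ). The names `softplus` and `mish` are illustrative, and the overflow-safe form of softplus is a choice made for this sketch rather than something the source specifies.

```python
import math


def softplus(x: float) -> float:
    """ln(1 + e^x), rearranged so large |x| does not overflow math.exp."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish: the input gated by tanh of its softplus."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    for value in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(value, mish(value))
```

Like Swish, Mish is smooth and dips slightly below zero for moderately negative inputs, while behaving almost like the identity for large positive inputs.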

2. Mish Experiments

Output Landscape of a Random Neural Network

Test Accuracy vs. Number of Layers on MNIST

Test Accuracy vs. Batch Size on CIFAR-10

Reference

https://arxiv.org/pdf/1908.08681.pdf

Other Activation Functions

Rectified Activations: https://arxiv.org/pdf/1505.00853.pdf

Sparsemax: https://arxiv.org/pdf/1602.02068.pdf
